Enterprise Applications of Generative AI – A Perspective

Generative AI has created a lot of excitement in the industry and the AI research community over the past few months. We have seen an explosion of SaaS products and services since OpenAI made GPT-3 and its other products available to the general public. This has allowed experts and curious newcomers alike to explore the possibilities and come up with new ideas for how these technologies can enhance human knowledge and capabilities. I think the business case for generative AI applications is somewhat clearer from a standalone consumer's perspective than for enterprise use.

The enterprise environment differs from retail in many ways. An enterprise IT ecosystem operates within a closed environment, with a lot of emphasis on information security, data flow and integration of various systems. A multitude of ERP systems operate in tandem across departments to fulfill business processes, carry out complex transactions and provide visibility into the components that keep the business going. Strategic and proprietary information needs to be kept secure, and know-how is often quite complex and held by a small pool of talented employees. Making an AI application work securely in such an environment is a different challenge altogether.

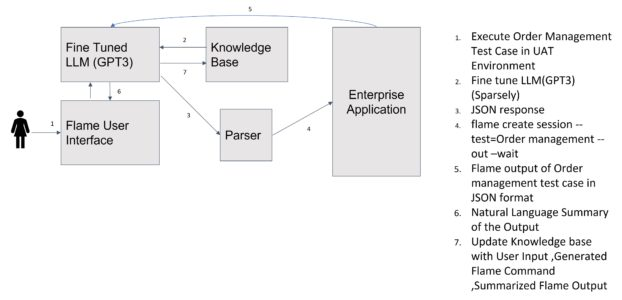

The engineering team at Atalgo is working hard to address this challenge. Under the working title of Flame, we have developed an architecture for enterprise use of generative AI. This architecture has three distinct components:

- User Interface

- Knowledge Base

- Back-end (application)

The user interface is a fine-tuned LLM trained on enterprise workflows and databases. Security and access control for this interface are a critical piece of the Flame architecture. The Flame offering caters for both cloud-based and on-prem LLM setups, and we can provide granular access to different employees within the organization.
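To make the idea of granular access more concrete, here is a minimal sketch of a role-based permission check. It is not Flame's actual implementation: the role names, the permission strings such as `finance:read`, and the `is_allowed` helper are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical mapping from roles to permissions (illustrative only).
# "*" is treated as a wildcard that grants every permission.
ROLE_PERMISSIONS = {
    "analyst": {"finance:read"},
    "finance_manager": {"finance:read", "finance:execute"},
    "admin": {"*"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset


def is_allowed(user: User, permission: str) -> bool:
    """Return True if any of the user's roles grants the permission."""
    for role in user.roles:
        granted = ROLE_PERMISSIONS.get(role, set())  # unknown roles grant nothing
        if "*" in granted or permission in granted:
            return True
    return False
```

In a setup like this, the interface would call `is_allowed` before passing a request on, so an analyst could ask for a finance summary but could not trigger a finance workflow.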

The Knowledge Base is the brain of this implementation. It contains the learnings from all the training carried out on the organization's specific processes and data, and it is proprietary to the organization. As users within the organization interact with Flame, the Knowledge Base keeps getting updated and the breadth of usage grows. As with any other AI application, the effectiveness of Flame will depend on how users use the system.

The back-end consists of a parser and an application database. The parser converts each query into an applicable CLI command (or any other formal data structure that the application database can understand), and the Flame engine executes the command against the application. These commands can be descriptive (asking for information and summaries of various datasets) or prescriptive (asking the application to execute a business workflow).
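The query-to-command flow described here can be sketched roughly as follows. This is an illustrative sketch only: a small keyword table stands in for the LLM-backed parser, and the `Command` type, the `RULES` table, the CLI tokens and the `allow_prescriptive` flag are all hypothetical names, not part of Flame.

```python
from dataclasses import dataclass
from enum import Enum


class CommandKind(Enum):
    DESCRIPTIVE = "descriptive"    # read-only: information and summaries
    PRESCRIPTIVE = "prescriptive"  # asks the application to run a workflow


@dataclass(frozen=True)
class Command:
    kind: CommandKind
    argv: tuple  # CLI-style tokens the application is assumed to accept


# Hypothetical keyword rules standing in for the real parser.
RULES = [
    ("summarize", CommandKind.DESCRIPTIVE, ("report", "summary")),
    ("show", CommandKind.DESCRIPTIVE, ("report", "list")),
    ("approve", CommandKind.PRESCRIPTIVE, ("workflow", "approve")),
    ("create", CommandKind.PRESCRIPTIVE, ("workflow", "create")),
]


def parse_query(query: str) -> Command:
    """Map a user query to a structured command, or raise ValueError."""
    words = query.split()
    if not words:
        raise ValueError("empty query")
    verb, rest = words[0].lower(), tuple(words[1:])
    for keyword, kind, prefix in RULES:
        if verb == keyword:
            return Command(kind, prefix + rest)
    raise ValueError(f"no rule matches query: {query!r}")


def execute(command: Command, allow_prescriptive: bool = False) -> str:
    """Return the command line that would be run against the application.

    Prescriptive commands change state, so they need an explicit opt-in.
    """
    if command.kind is CommandKind.PRESCRIPTIVE and not allow_prescriptive:
        raise PermissionError("prescriptive commands require explicit approval")
    return " ".join(command.argv)
```

Separating parsing from execution means a descriptive query can run freely, while a prescriptive one can be held back for confirmation before it touches a business workflow.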

A high-level conceptual architecture of Flame is given below:

The diagram above depicts a generic approach to enterprise applications using generative AI. We have obtained some good results on the benchmark questionnaires we set for our demo application. In my view, one of the initial use cases for enterprise AI will be the software development process itself: test automation, DevOps, Quality Engineering and Observability. That said, there seems to be huge potential for enterprise AI as we embark on more use cases across businesses.

